Letta 0.12 (current patch version: 0.12.1) is out! This is our first release in quite some time, and it comes with a long list of bug fixes and improvements.

See it on Docker here.

Of these changes, the most important is the new agent architecture, letta_v1_agent. It should resolve a substantial number of issues related to:

- Open-weight model providers such as OpenRouter or Groq, and local providers such as Ollama, not producing tool calls, which left agents unusable

- Claude agents producing only reasoning, with no assistant messages

- GPT-5 models performing poorly

We recommend updating to this new architecture going forward. You can create new agents with this architecture using the SDK:

Python:

client.agents.create(agent_type="letta_v1_agent")

TypeScript:

await client.agents.create({ agentType: "letta_v1_agent" });

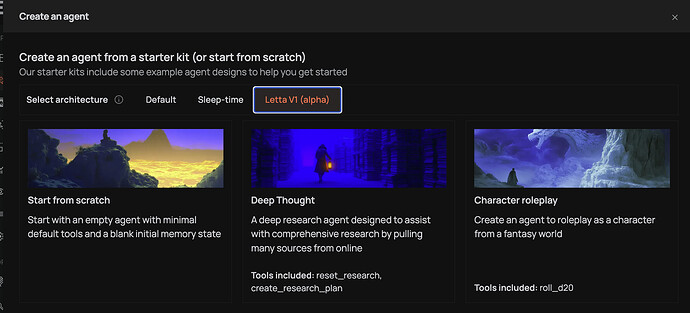

These agents can also be created in the ADE by selecting the Letta (v1) Alpha tab in the agent creation view:

This should already be available on self-hosted deployments. We are gradually rolling it out to Letta Cloud users, so please stay tuned.

Major Features

New Agent Architecture: letta_v1_agent

The new recommended agent architecture with significant improvements over the legacy agent system.

What’s Different:

- No send_message tool required: works with any chat model, including non-tool-calling models

- No heartbeat system

- Simpler base system prompt: modern LLMs already understand agentic control loops, so the prompt no longer has to explain them

- Follows standard tool calling patterns (auto mode) for broader compatibility
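To make the bullets above concrete, here is a minimal, generic sketch of the standard tool-calling loop: the model either returns tool calls, which are executed and fed back, or a plain assistant message, which ends the turn. This is an illustration of the pattern, not Letta's implementation; `ModelReply`, `call_model`, and `run_turn` are hypothetical names. Note that no `send_message` tool or heartbeat is needed to decide when the turn is over.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class ModelReply:
    """Hypothetical model response: either tool calls or plain assistant text."""
    content: str = ""
    tool_calls: list[tuple[str, dict]] = field(default_factory=list)


def run_turn(
    call_model: Callable[[list[dict]], ModelReply],
    tools: dict[str, Callable[..., str]],
    messages: list[dict],
    max_steps: int = 10,
) -> str:
    """Run one agent turn: execute tools until the model answers in plain text."""
    for _ in range(max_steps):
        reply = call_model(messages)
        if not reply.tool_calls:
            # No tool calls: the assistant message itself ends the turn.
            messages.append({"role": "assistant", "content": reply.content})
            return reply.content
        for name, args in reply.tool_calls:
            result = tools[name](**args)
            messages.append({"role": "tool", "name": name, "content": result})
    raise RuntimeError("step limit reached without a final assistant message")


# Usage: a scripted fake model that calls one tool, then answers.
replies = iter([
    ModelReply(tool_calls=[("get_time", {})]),
    ModelReply(content="It is noon."),
])
answer = run_turn(lambda msgs: next(replies), {"get_time": lambda: "12:00"}, [])
print(answer)  # It is noon.
```

The `max_steps` cap is a design choice of this sketch: without heartbeats, a runaway loop of tool calls still needs some bound.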

Provider Support:

- Compatible with all inference providers (OpenRouter, Azure, Together, Ollama, etc.).

- Works with non-tool-calling models.

- Supports OpenAI’s Responses API for drastically improved performance with GPT-5 models.

Trade-offs:

- No heartbeats: Agents won’t independently trigger repeated execution. If you need sleep-time compute or periodic processing, implement this through your own prompting or scheduling.

- Tool rules on messaging: You cannot apply tool rules to agent messaging; for example, you cannot require a particular tool call to be followed by an assistant message.

- Reasoning visibility: Non-reasoning models (GPT-4.1, GPT-4o-mini) will no longer generate explicit reasoning output.

- Reasoning control: Less control over reasoning tokens, which are typically encrypted by providers and cannot be passed between different providers.

Recommendation: Create all new agents as letta_v1_agent. The expanded provider compatibility and simpler architecture make this the best choice for most use cases. Use legacy agents only if you specifically need heartbeats or tool rules on messaging.
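Because letta_v1_agent has no heartbeats, periodic processing has to be driven from outside the agent. The sketch below shows the idea with a simple fixed-interval loop. `send_to_agent` is a hypothetical callable that you would wire to your SDK's message-sending call for a specific agent; in production, a cron job or task scheduler is usually a better fit than a long-running loop.

```python
import time
from typing import Callable


def run_periodically(
    send_to_agent: Callable[[str], None],
    prompt: str,
    interval_s: float,
    iterations: int,
    sleep: Callable[[float], None] = time.sleep,
) -> int:
    """Send the same prompt to an agent on a fixed schedule.

    send_to_agent is hypothetical: wrap your SDK's message call here.
    Returns the number of messages sent.
    """
    sent = 0
    for i in range(iterations):
        send_to_agent(prompt)
        sent += 1
        if i < iterations - 1:
            sleep(interval_s)  # wait before the next scheduled run
    return sent


# Usage: collect prompts instead of calling a real agent, and skip the waiting.
log: list[str] = []
run_periodically(log.append, "Review your memory and summarize new notes.", 3600, 3, sleep=lambda s: None)
print(len(log))  # 3
```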

Human-in-the-Loop (HITL)

Tools can now require human approval before execution. Set approval requirements via API or in the ADE for greater control over agent actions.
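As a rough illustration of the approval flow, the sketch below gates selected tools behind a human decision before they run. It is a generic pattern, not Letta's HITL API: `execute_tool`, `ask_human`, and `ApprovalDenied` are hypothetical, and raising an exception on rejection is an assumption of this sketch (a real system might instead return the rejection to the agent).

```python
from typing import Callable


class ApprovalDenied(Exception):
    """Raised when a human rejects a pending tool call."""


def execute_tool(
    name: str,
    args: dict,
    tools: dict[str, Callable[..., str]],
    needs_approval: set[str],
    ask_human: Callable[[str, dict], bool],
) -> str:
    """Run a tool, pausing for human approval if the tool requires it."""
    if name in needs_approval and not ask_human(name, args):
        raise ApprovalDenied(f"{name} was rejected by the reviewer")
    return tools[name](**args)


tools = {
    "read_file": lambda path: f"contents of {path}",
    "delete_file": lambda path: f"deleted {path}",
}
# Only the destructive tool is gated; this reviewer rejects everything,
# but read_file is not gated, so it still runs.
print(execute_tool("read_file", {"path": "a.txt"}, tools, {"delete_file"}, lambda n, a: False))
```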

Documentation

Parallel Tool Calling

Agents now execute multiple tool calls simultaneously when the inference provider supports it. Each tool runs in its own sandbox, so the calls truly execute in parallel. See the Claude documentation for examples of parallel tool calling.
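The benefit of running independent tool calls concurrently can be seen in a small sketch. Letta runs each tool in its own sandbox; here a thread pool stands in for those sandboxes, which is a simplification. `run_tool_calls_parallel` and `slow_lookup` are hypothetical names, and results are returned in the original call order so they can be matched back to the model's tool calls.

```python
import time
from concurrent.futures import ThreadPoolExecutor
from typing import Callable


def run_tool_calls_parallel(
    calls: list[tuple[str, dict]],
    tools: dict[str, Callable[..., str]],
) -> list[str]:
    """Run independent tool calls concurrently; results keep the call order."""
    if not calls:
        return []
    with ThreadPoolExecutor(max_workers=len(calls)) as pool:
        futures = [pool.submit(tools[name], **args) for name, args in calls]
        return [f.result() for f in futures]


def slow_lookup(city: str) -> str:
    time.sleep(0.2)  # simulate a slow network call
    return f"weather in {city}: sunny"


start = time.perf_counter()
results = run_tool_calls_parallel(
    [("lookup", {"city": "Paris"}), ("lookup", {"city": "Tokyo"})],
    {"lookup": slow_lookup},
)
elapsed = time.perf_counter() - start
print(results)
# elapsed is roughly 0.2s rather than 0.4s, because the two calls overlap
```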

Runs API

New tracking system providing substantially improved observability and debugging capabilities. Documentation coming soon.

Enhanced Archival Memory (Letta Cloud only)

- Hybrid search: Combines full-text and semantic search

- DateTime filtering: Query memories by time range

- Search API endpoint: Documentation
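One common way to combine a full-text ranking with a semantic ranking is reciprocal rank fusion (RRF), followed here by a time-range filter. The release notes do not say which fusion method Letta Cloud uses, so treat this purely as an illustration of the hybrid-search idea; the function names and the `k=60` constant are assumptions of the sketch.

```python
from datetime import datetime, timezone


def reciprocal_rank_fusion(rankings: list[list[str]], k: int = 60) -> list[str]:
    """Merge ranked lists of IDs; an item ranked high in any list rises."""
    scores: dict[str, float] = {}
    for ranking in rankings:
        for rank, doc_id in enumerate(ranking, start=1):
            scores[doc_id] = scores.get(doc_id, 0.0) + 1.0 / (k + rank)
    return sorted(scores, key=lambda d: scores[d], reverse=True)


def hybrid_search(
    fulltext_ranking: list[str],
    semantic_ranking: list[str],
    created_at: dict[str, datetime],
    start: datetime,
    end: datetime,
) -> list[str]:
    """Fuse both rankings, then keep memories created in [start, end)."""
    fused = reciprocal_rank_fusion([fulltext_ranking, semantic_ranking])
    return [d for d in fused if start <= created_at[d] < end]


created = {
    "m1": datetime(2025, 1, 5, tzinfo=timezone.utc),
    "m2": datetime(2025, 2, 1, tzinfo=timezone.utc),
    "m3": datetime(2025, 1, 20, tzinfo=timezone.utc),
}
# m1 appears in both rankings, so it wins; m2 falls outside January.
hits = hybrid_search(
    ["m1", "m2"], ["m3", "m1"], created,
    datetime(2025, 1, 1, tzinfo=timezone.utc), datetime(2025, 2, 1, tzinfo=timezone.utc),
)
print(hits)  # ['m1', 'm3']
```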

Improved Pagination

Cursor-based pagination now available across many endpoints for handling large result sets.
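Cursor-based pagination is usually consumed with a loop that passes the last ID seen as the cursor for the next request. The sketch below assumes a hypothetical `fetch_page(after, limit)` callable; check the API reference for the exact cursor parameter names each Letta endpoint accepts.

```python
from typing import Callable, Iterator, Optional


def iterate_all(
    fetch_page: Callable[[Optional[str], int], list[dict]],
    limit: int = 50,
) -> Iterator[dict]:
    """Yield every item from a cursor-paginated endpoint.

    fetch_page(after, limit) is hypothetical: it returns up to `limit` items
    following the item whose ID is `after` (None means the first page).
    """
    after: Optional[str] = None
    while True:
        page = fetch_page(after, limit)
        yield from page
        if len(page) < limit:
            return  # a short or empty page means there is nothing left
        after = page[-1]["id"]  # cursor: the last ID seen


# Usage against an in-memory fake backend with 120 items.
ITEMS = [{"id": f"msg-{i:03d}"} for i in range(120)]


def fake_fetch(after: Optional[str], limit: int) -> list[dict]:
    start = 0 if after is None else next(i for i, x in enumerate(ITEMS) if x["id"] == after) + 1
    return ITEMS[start:start + limit]


print(sum(1 for _ in iterate_all(fake_fetch, limit=50)))  # 120
```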

New Tools

Memory Omni-Tool

Unified memory interface for more intuitive agent memory management.

fetch_webpage Tool

Utility tool for retrieving LLM-friendly webpage content.
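To show what "LLM-friendly" content means in practice, here is a minimal sketch that strips a page down to its visible text using Python's standard `html.parser`. It is not the fetch_webpage implementation (which may, for example, produce markdown) and it performs no network request; `html_to_text` is a hypothetical name.

```python
from html.parser import HTMLParser


class _TextExtractor(HTMLParser):
    """Collect visible text, skipping <script> and <style> contents."""

    SKIP = {"script", "style"}

    def __init__(self) -> None:
        super().__init__()
        self.parts: list[str] = []
        self._skip_depth = 0

    def handle_starttag(self, tag, attrs):
        if tag in self.SKIP:
            self._skip_depth += 1

    def handle_endtag(self, tag):
        if tag in self.SKIP and self._skip_depth:
            self._skip_depth -= 1

    def handle_data(self, data):
        if not self._skip_depth and data.strip():
            self.parts.append(data.strip())


def html_to_text(html: str) -> str:
    """Reduce an HTML page to plain text lines an LLM can read cheaply."""
    parser = _TextExtractor()
    parser.feed(html)
    parser.close()
    return "\n".join(parser.parts)


page = (
    "<html><head><style>p{color:red}</style></head><body>"
    "<h1>Title</h1><p>Hello <b>world</b></p><script>var x=1;</script>"
    "</body></html>"
)
print(html_to_text(page))  # Title, Hello, world on separate lines
```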

Agent Configuration

Templates & Agentfiles

- Template updates: Templates can now be updated via agentfiles

- Agentfile v2 schema: Now supports groups, folders, etc.

Breaking Changes

Deprecated APIs

- get_folder_by_name: Use client.folders.list(name=...) instead

- sources routes: All routes renamed to folders

Full changelog: GitHub